Simplify Domino installation on Ubuntu

As usual: YMMV

Posted by Stephan H Wissel on 30 December 2010 | Comments (5) | categories: IBM Notes Linux Lotus Notes

Usability - Productivity - Business - The web - Singapore & Twins


they started bugging their grandfather to teach them how to solder (luckily he is a retired electrician) and are looking forward to bringing that CT Bot to life. They might even pick up some German along the way.

Posted by Stephan H Wissel on 30 December 2010 | Comments (1) | categories: After hours Twins

burnChart("divWhereToDraw", widthInPix, heightInPix, DataAsArrayOfArrays, RemainingUnitsOfWork, displayCompletionEstimateYesNo); where the data comes in pairs of UnitsWorked, UnitsAddedByChangeRequests. Something like var DataAsArrayOfArrays = [[10,0],[20,0],[20,5],[20,30],[20,0]];. It is up to you to give the unit a meaning. The graphic automatically fills the given space to the fullest. If RemainingUnitsOfWork is zero it will hit the lower right corner exactly. I call my routine from this sample script:
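The original sample script is not part of this excerpt. As a rough illustration of how the data pairs and RemainingUnitsOfWork could relate, here is a minimal sketch that derives the remaining-work value at each point of the chart. The helper name `burnSeries` and the backwards calculation are assumptions for illustration, not the routine behind `burnChart`.

```javascript
// Hypothetical helper, not part of burnChart: compute the remaining work
// before the first period and after every period.
// Assumption: each pair is [unitsWorked, unitsAddedByChangeRequests] and
// remainingUnitsOfWork is what is left after the last pair.
function burnSeries(data, remainingUnitsOfWork) {
  const series = new Array(data.length + 1);
  series[data.length] = remainingUnitsOfWork;
  // Walk backwards: work done in a period was still open before it,
  // scope added by change requests was not.
  for (let i = data.length - 1; i >= 0; i--) {
    const [worked, added] = data[i];
    series[i] = series[i + 1] + worked - added;
  }
  return series;
}

const data = [[10, 0], [20, 0], [20, 5], [20, 30], [20, 0]];
const remaining = burnSeries(data, 0);
console.log(remaining); // [ 55, 45, 25, 10, 20, 0 ]
```

With RemainingUnitsOfWork set to zero the last value is 0, which matches the line ending in the lower right corner. The fourth period adds more scope (30) than it burns (20), so the line rises there.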

Posted by Stephan H Wissel on 29 December 2010 | Comments (1) | categories: Software

Posted by Stephan H Wissel on 28 December 2010 | Comments (2) | categories: Software

Posted by Stephan H Wissel on 28 December 2010 | Comments (0) | categories: Software

Posted by Stephan H Wissel on 27 December 2010 | Comments (8) | categories: Lotusphere

Posted by Stephan H Wissel on 23 December 2010 | Comments (2) | categories: Show-N-Tell Thursday

Posted by Stephan H Wissel on 21 December 2010 | Comments (6) | categories: Show-N-Tell Thursday

Posted by Stephan H Wissel on 18 December 2010 | Comments (1) | categories: Show-N-Tell Thursday

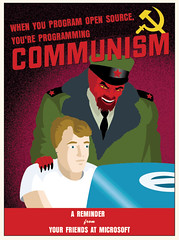

Linux is gaining traction in China despite the fact that you can buy a (pirated) Windows CD for a dollar a pop. There is Red Flag, a Chinese incarnation of Fedora, and a trend for more large Chinese companies to join the Linux Foundation. In November China Mobile Communications Corporation (CMCC), the world's largest mobile operator, joined the foundation as a Gold Member, followed in December by Huawei, the maker of network gear and mobile devices (we all love the E5). This highlights two interesting trends. First, Huawei and CMCC are both telecom players: Huawei builds networks, CMCC runs networks. Second, China is striving to transform from the world's workbench into the world's R&D centre. Judging from my interaction with my Chinese colleagues I would say: they are on the right trajectory. So is it now time to brush up your Chinese and join the Linux Foundation yourself, and time for IBM to revisit Domino Designer on Linux?

Posted by Stephan H Wissel on 15 December 2010 | Comments (0) | categories: Linux

Posted by Stephan H Wissel on 12 December 2010 | Comments (2) | categories: After hours Linux

Posted by Stephan H Wissel on 12 December 2010 | Comments (1) | categories: After hours

Posted by Stephan H Wissel on 12 December 2010 | Comments (5) | categories: After hours

facesContext.getResponseWriter() in the afterRenderResponse event, while you call the facesContext.getOutputStream() in the beforeRenderResponse event (remember only one please!) writer.close(); at the end. Starting with 8.5.2 this actually throws an errorPrint, so the temptation is rather high to use writer.write("..."). This misses an opportunity to take advantage of code other people have written and debugged. Let a SAX Transformer take care of encoding, namespaces and attribute handling. Use this sample as a starting point (yes, SAX can be used to create a document; use a Stack to control nesting). If you have a complicated result you could use a DOM object to render your result, which has the advantage that you can assemble it in a non-linear fashion.

Posted by Stephan H Wissel on 09 December 2010 | Comments (0) | categories: XPages
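To illustrate the idea of letting a writer handle encoding and using a stack to control nesting, here is a minimal sketch in plain JavaScript. It is a stand-in for illustration only, not the Java SAX Transformer API the post refers to. The names `createXmlWriter` and `escapeXml` are hypothetical, and namespace handling is left out.

```javascript
// Hypothetical stand-in for a SAX-style writer; NOT the Java TransformerHandler.
// Escapes the characters that would otherwise break element or attribute content.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function createXmlWriter() {
  const open = []; // stack of currently open element names
  const out = [];  // output fragments, joined at the end
  return {
    startElement(name, attributes = {}) {
      const attrs = Object.keys(attributes)
        .map((key) => ` ${key}="${escapeXml(attributes[key])}"`)
        .join("");
      out.push(`<${name}${attrs}>`);
      open.push(name);
      return this;
    },
    characters(text) {
      out.push(escapeXml(text));
      return this;
    },
    endElement() {
      if (open.length === 0) throw new Error("no open element to close");
      // The stack knows which element is innermost, so the caller cannot mismatch tags.
      out.push(`</${open.pop()}>`);
      return this;
    },
    endDocument() {
      // Close whatever is still open, innermost first.
      while (open.length > 0) this.endElement();
      return out.join("");
    }
  };
}

const xml = createXmlWriter()
  .startElement("result", { status: "ok" })
  .startElement("item", { title: 'Fish & "Chips"' })
  .characters("1 < 2")
  .endDocument();
console.log(xml);
// <result status="ok"><item title="Fish &amp; &quot;Chips&quot;">1 &lt; 2</item></result>
```

Compared with hand-assembled writer.write("...") calls, the escaping and the closing tags are produced in one place, which is the kind of work the post suggests delegating to a SAX Transformer.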

Posted by Stephan H Wissel on 07 December 2010 | Comments (3) | categories: GTD

Posted by Stephan H Wissel on 07 December 2010 | Comments (1) | categories: Lotusphere

Posted by Stephan H Wissel on 06 December 2010 | Comments (1) | categories: Business Software

Posted by Stephan H Wissel on 06 December 2010 | Comments (0) | categories: After hours

Posted by Stephan H Wissel on 03 December 2010 | Comments (2) | categories: After hours

Posted by Stephan H Wissel on 01 December 2010 | Comments (6) | categories: Show-N-Tell Thursday